Is AI as capable as humans? Here's how far artificial intelligence has come.

Photo illustration by Elizabeth Ciano // Stacker // Shutterstock


A digital illustration of an Apollo statue and three colorful variations.

In early 2021, the internet became transfixed by pictures of “avocado armchairs.”

Generated by OpenAI’s DALL-E artificial intelligence system, the images appeared on screen in response to the simplest of prompts: a human user typing “avocado armchair.” The results were surreal and shockingly realistic, and they marked a turning point for AI. Despite the unusual request, DALL-E had no trouble conjuring up images that fit the bill.

Three years later, AI continues to improve. Verbit used data from academic research to see just how far AI is progressing.

Today’s AI can produce images that are virtually indistinguishable from photographs or human-created drawings, which raises reasonable concerns. Artists worry about their economic futures, for one, while the proliferation of AI-generated images and videos of real people has prompted lawmakers to start drafting bills to combat nonconsensual “deepfakes.”

Image generation is just one area in which AI use is exploding. Computers have made huge strides in other domains, from text generation and coding to music composition. AI is at its most impressive when it comes to speed: Whether writing a song, producing artwork, or penning an email, humans simply can’t match AI’s processing speed. In other areas, AI still falls short. Empathy, the depth of human emotion, self-awareness, and creativity are all areas where many might hope AI never catches up.


AI is improving rapidly

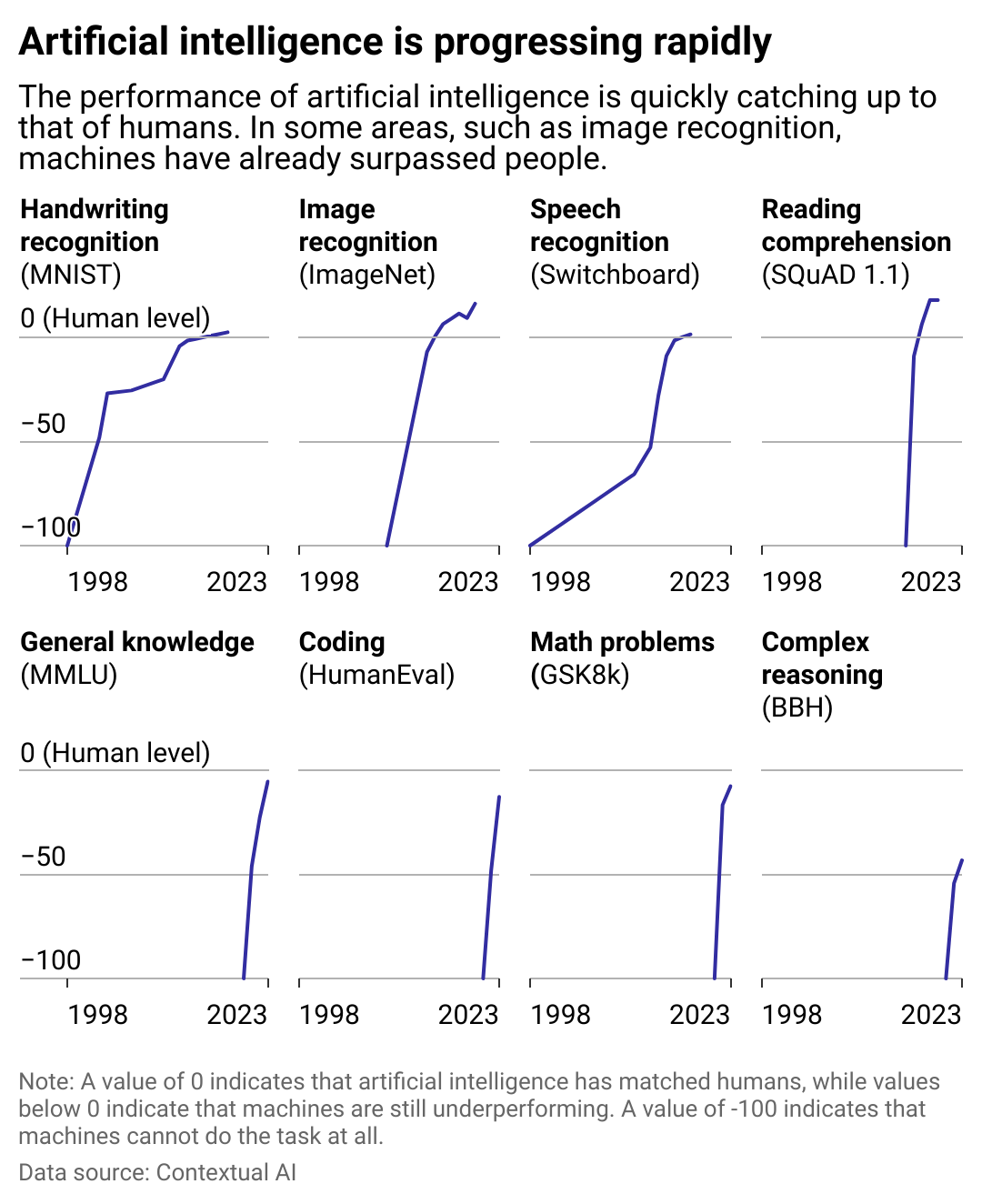

A series of line charts showing the performance of AI systems versus humans in various domains from 1998 to 2023. AI has caught up to people in handwriting, image, and speech recognition, but still lags behind when it comes to coding and complex reasoning.

One way to track the development of AI systems is to see how they have performed on benchmark tests over time. Researchers at Contextual AI tracked the performance of several AI systems on a diverse set of tasks. The data show remarkable improvements in recent years.

AI systems have come to surpass humans on tasks such as image and speech recognition and even reading comprehension. The numbers also highlight areas where machines still lag behind people, such as reasoning and computer programming.

Another way to measure AI performance is to see how well it does on academic exams. In one experiment, researchers at the University of Minnesota Law School gave ChatGPT (then running on GPT-3.5) multiple-choice and essay questions, then graded its responses blindly alongside exams from real students. The AI scored a C+, enough to pass the class.

A different group of researchers examined how ChatGPT performs on the bar exam. GPT-3.5 failed, scoring only in the 10th percentile. GPT-4, a newer and more powerful AI model, fared much better, scoring in the 90th percentile.

But AI is still not quite human

Any person who could perform as well as AI on these benchmarks would look like a genius. Yet despite strong performance across all of these metrics, modern AI systems still fall short of humans in a number of key ways.

One obvious shortcoming of current AI systems is the so-called “hallucination problem,” in which the system confidently fabricates false facts backed by imaginary sources. Humans are also prone to making up facts, but they are generally aware when they are doing so.

Another serious shortcoming of current AI systems is their limited memory. They can have a hard time processing a large text source, such as a long book, and can seem less intelligent over extended conversations. They also generally cannot remember what was said in other conversations, which hampers their ability to learn.

Perhaps the biggest weakness of current AI systems is their limited ability to reason. “Large language models” such as the one behind ChatGPT are built to predict the next word in a sequence. They handle complex reasoning far less well than humans do, which limits their ability to work through complicated problems that require multiple steps.
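To make the idea of next-word prediction a little more concrete, here is a deliberately tiny sketch in Python. It is a toy “bigram” model that just counts which word most often follows another in a short sample sentence; the sample text and function names are illustrative assumptions, and this is not how ChatGPT is actually built. Real large language models use neural networks trained on enormous amounts of text and work with word fragments called tokens, but they likewise generate text by predicting one piece at a time and feeding each prediction back in.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Toy model: count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if nothing ever followed it."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Extend a sequence one word at a time, always picking the most common next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:  # no known continuation, so stop early
            break
        out.append(nxt)
    return out


# Hypothetical sample text, chosen only for illustration.
corpus = "the cat sat on the mat the cat sat on the rug the dog ran"
model = train_bigrams(corpus)

print(predict_next(model, "the"))         # prints: cat
print(" ".join(generate(model, "the", 4)))  # prints: the cat sat on the
```

Because this toy model only ever picks the statistically most common next word, it has no sense of whether a sentence is true or logically sound. That offers a rough intuition, though far from a full explanation, for why next-word prediction on its own can struggle with multistep reasoning.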

Science fiction often portrays robots as exceptionally skilled at logic but poor at anything that involves the subjective, such as creating art. Yet, at least as of 2024, the very opposite seems true of AI in the real world.

Story editing by Nicole Caldwell. Copy editing by Kristen Wegrzyn.

This story originally appeared on Verbit and was produced and distributed in partnership with Stacker Studio.