ChatGPT is landing kids in the principal's office, but not because of cheating

Ever since ChatGPT burst onto the scene last year, a heated debate has centered on its potential benefits and pitfalls for students. As educators worry students could use artificial intelligence tools to cheat, The 74 looks into a new survey that makes clear the technology’s impact on young people: They’re getting into trouble.

Half of teachers say they know a student at their school who was disciplined or faced negative consequences for using — or being accused of using — generative artificial intelligence like ChatGPT to complete a classroom assignment, according to survey results released in September by the Center for Democracy and Technology, a nonprofit think tank focused on digital rights and expression. The proportion was even higher, at 58%, for those who teach special education.

Cheating concerns were clear, with survey results showing that teachers have grown suspicious of their students. Nearly two-thirds of teachers said that generative AI has made them “more distrustful” of students and 90% said they suspect kids are using the tools to complete assignments. Yet students themselves who completed the anonymous survey said they rarely use ChatGPT to cheat, but are turning to it for help with personal problems.

“The difference between the hype cycle of what people are talking about with generative AI and what students are actually doing, there seems to be a pretty big difference,” said Elizabeth Laird, the group’s director of equity in civic technology. “And one that, I think, can create an unnecessarily adversarial relationship between teachers and students.”

Indeed, 58% of students, and 72% of those in special education, said they’ve used generative AI during the 2022-23 academic year, just not primarily for the reasons that teachers fear most. Among youth who completed the nationally representative survey, just 23% said they used it for academic purposes and 19% said they’ve used the tools to help them write and submit a paper. Instead, 29% reported having used it to deal with anxiety or mental health issues, 22% for issues with friends and 16% for family conflicts.

The Center for Democracy and Technology

One challenge has been a dearth of training and guidance on how to deal with AI

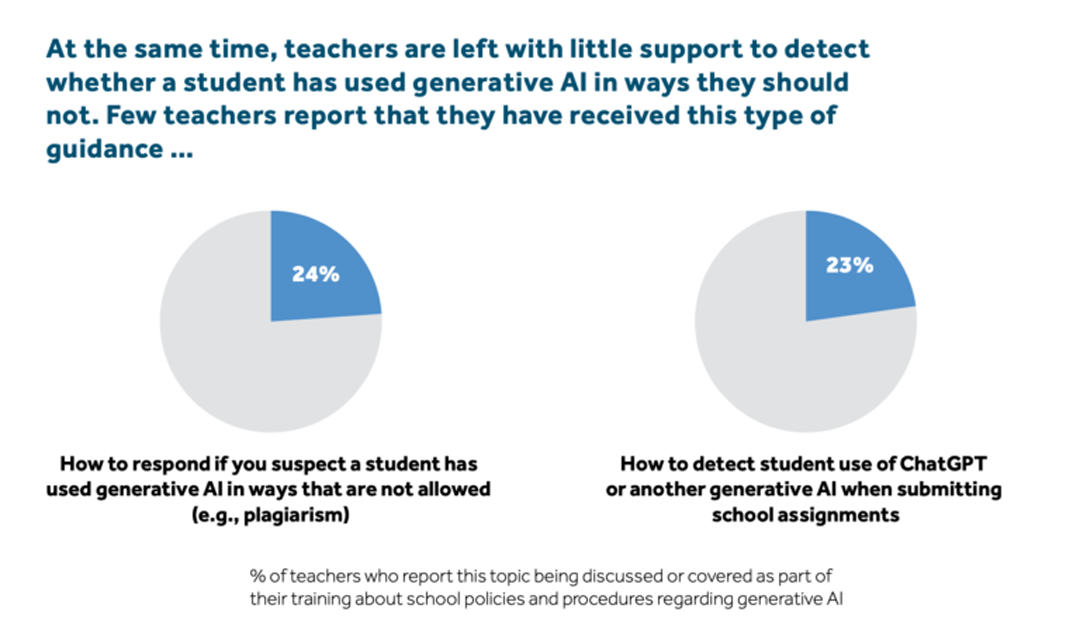

A pair of pie charts showing survey results on what percentage of polled teachers felt they had been trained to deal with AI

Part of the disconnect dividing teachers and students, researchers found, may come down to gray areas. Just 40% of parents said they or their child were given guidance on ways they can use generative AI without running afoul of school rules. Only 24% of teachers say they’ve been trained on how to respond if they suspect a student used generative AI to cheat.

The results on ChatGPT’s educational impacts were included in the Center for Democracy and Technology’s broader annual survey analyzing the privacy and civil rights concerns of teachers, students, and parents as tech, including artificial intelligence, becomes increasingly ingrained in classroom instruction. Beyond generative AI, researchers observed a sharp uptick in digital privacy concerns among students and parents over the last year.

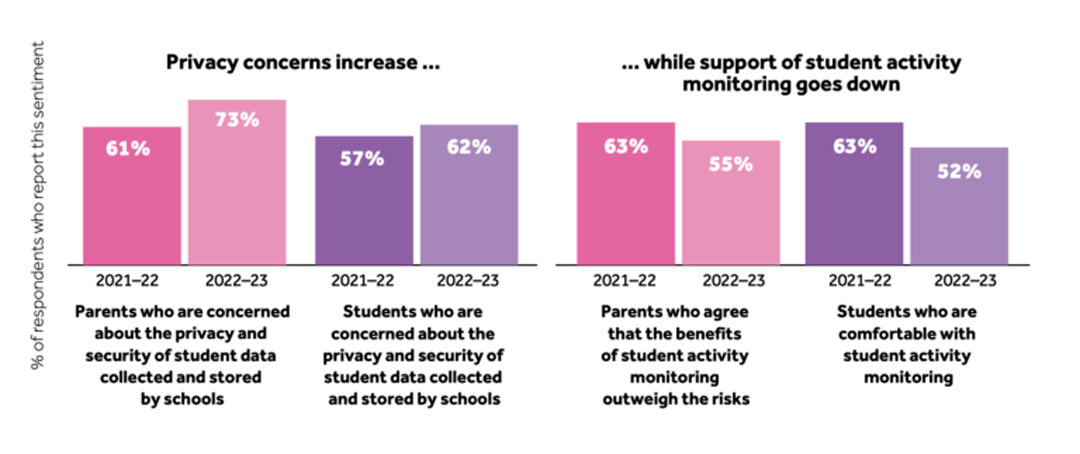

Among parents, 73% said they’re concerned about the privacy and security of student data collected and stored by schools, a considerable increase from the 61% who expressed those reservations the previous year. A similar if less dramatic trend was apparent among students: 62% had data privacy concerns tied to their schools, compared with 57% just a year earlier.

Digital surveillance technology can pose disparate impacts on students; parent support for it is also waning

A bar chart outlining personal privacy concerns of polled students and parents due to AI

Rising levels of anxiety, researchers theorized, are likely the result of the growing frequency of cyberattacks on schools, which have become a primary target for ransomware gangs. High-profile breaches, including in Los Angeles and Minneapolis, have compromised a massive trove of highly sensitive student records. Exposed records, investigative reporting by The 74 has found, include student psychological evaluations, reports detailing campus rape cases, student disciplinary records, closely guarded files on campus security, employees’ financial records, and copies of government-issued identification cards.

Survey results found that students in special education, whose records are among the most sensitive that districts maintain, and their parents were significantly more likely than the general education population to report school data privacy and security concerns. As attacks ratchet up, 1 in 5 parents say they’ve been notified that their child’s school experienced a data breach. Such breach notices, Laird said, led to heightened apprehension.

“There’s not a lot of transparency” about school cybersecurity incidents “because there’s not an affirmative reporting requirement for schools,” Laird said. But in instances where parents are notified of breaches, “they are more concerned than other parents about student privacy.”

Parents and students have also grown increasingly wary of another set of education tools that rely on artificial intelligence: digital surveillance technology. Among them are student activity monitoring tools, such as those offered by the for-profit companies Gaggle and GoGuardian, which rely on algorithms in an effort to keep students safe. The surveillance software employs artificial intelligence to sift through students’ online activities and alert school administrators, and sometimes the police, when it detects materials related to sex, drugs, violence, or self-harm.

Among parents surveyed this year, 55% said they believe the benefits of activity monitoring outweigh the potential harms, down from 63% last year. Among students, 52% said they’re comfortable with academic activity monitoring, a decline from 63% last year.

Such digital surveillance, researchers found, frequently has disparate impacts on students based on their race, disability, sexual orientation, and gender identity, potentially violating longstanding federal civil rights laws.

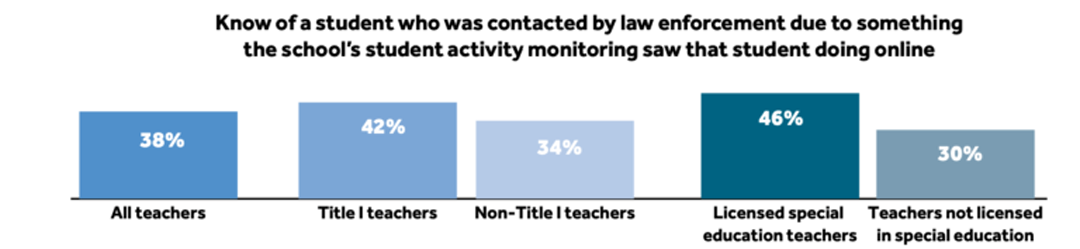

The tools also extend far beyond the school realm, with 40% of teachers reporting their schools monitor students’ personal devices. More than a third of teachers say they know a student who was contacted by the police because of online monitoring, the survey found, and Black parents were significantly more likely than their white counterparts to fear that information gleaned from online monitoring tools and AI-equipped campus surveillance cameras could fall into the hands of law enforcement.

More than 1 in 3 teachers know of a student whose online activity has drawn the attention of law enforcement; meanwhile, digital filtering presents a negative academic impact

A bar chart showing the percentage of polled teachers who know of a student whose online activity has drawn the attention of law enforcement

Meanwhile, as states nationwide pull literature from school library shelves amid a conservative crusade against LGBTQ+ rights, the nonprofit argues that digital tools that filter and block certain online content “can amount to a digital book ban.” Nearly three-quarters of students — and disproportionately LGBTQ+ youth — said that web filtering tools have prevented them from completing school assignments.

The nonprofit highlights how disproportionalities identified in the survey could run counter to federal laws that prohibit discrimination based on race and sex, and those designed to ensure equal access to education for children with disabilities. In a letter sent on September 20 to the White House and Education Secretary Miguel Cardona, the Center for Democracy and Technology was joined by a coalition of civil rights groups urging federal officials to take a harder tack on ed tech practices that could threaten students’ civil rights.

“Existing civil rights laws already make schools legally responsible for their own conduct, and that of the companies acting at their direction in preventing discriminatory outcomes on the basis of race, sex and disability,” the coalition wrote. “The department has long been responsible for holding schools accountable to these standards.”

This story was produced by The 74, a nonprofit news organization covering America’s education system from early childhood through college and career, and reviewed and distributed by Stacker Media.